Background

The leadership team at Sydney Airport was eager to implement an OpenAI use case that would increase the organisation’s AI maturity and lay the foundations for offering the solution to the wider user community.

We identified a suitable use case that would deliver value in a controlled environment:

HR BOT – an intelligent virtual agent designed to answer HR policy-related questions asked by employees.

Where We Started

HR staff time is spent on mundane tasks

Employees need to search multiple policies to find answers

HR questions could only be answered during business hours

Limited knowledge of OpenAI technology

Lack of processes to govern OpenAI

What We Set Out to Achieve

Adopt OpenAI Technology

Learn how to use OpenAI effectively and how to deliver high-quality outcomes.

Efficiency and Accuracy

Provide quick and accurate responses to HR policy-related questions. Allow HR personnel to focus on more complex and high-priority tasks.

Employee Satisfaction

Improve employee engagement by giving employees access to quick responses at any time.

Mature AI processes

Leverage this use case to enable the organisation to mature the processes that govern and manage AI.

Enabling the organisation to adopt OpenAI

Uncertainty about OpenAI and what it offers

Proof-of-concept (POC) implementations that build knowledge and confidence

Foundations established to enable rollout to the wider community

Our Approach

Developing our knowledge

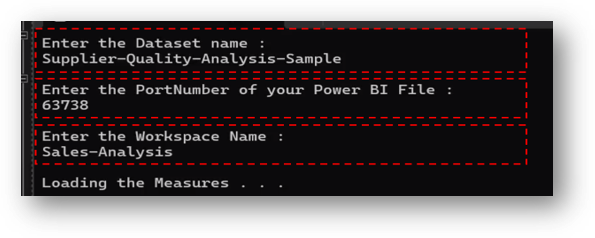

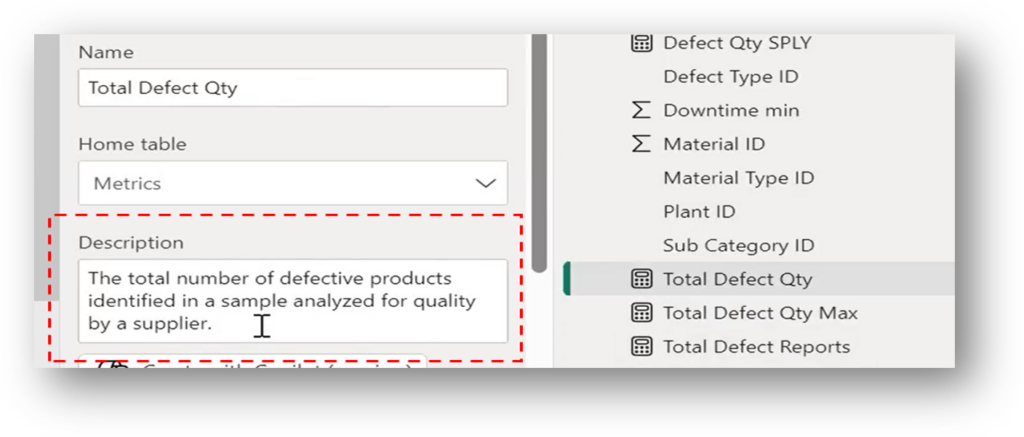

We first built our knowledge by developing a separate solution that analysed Power BI DAX expressions and automatically generated business definitions for them. This improved self-service and users’ understanding of reports and metrics.

OpenAI Playground

The OpenAI Playground was a great way to start the development process. Its simple GUI and helpful features made it easy to generate foundational code that was around 50-60% accurate.

Collaborating with Microsoft

Not only did Microsoft provide ECIF (End Customer Investment Funds) funding, but it also provided critical expertise to help deliver a high-quality solution.

Iteratively refining and improving

By refining the way we framed our questions and providing more context, we could guide the AI to deliver more precise and relevant answers. This iterative process became a critical part of our development cycle.

Power BI Metric Description Automation

Users provide the details of the dataset and workspace

OpenAI automatically generates a business description for each identified metric
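The metric-description flow can be sketched as a loop that turns each identified measure into a prompt. This is a minimal sketch, assuming the measure names and DAX expressions have already been extracted from the dataset (the extraction step is not described here). `MeasureInfo`, `build_description_prompt` and `describe_measures` are hypothetical names, and `complete` stands in for whatever chat-completion call the solution actually uses.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class MeasureInfo:
    """A Power BI measure, assumed to be already extracted from the dataset."""
    table: str
    name: str
    dax: str


def build_description_prompt(measure: MeasureInfo) -> List[Dict[str, str]]:
    """Build chat messages asking the model for a plain-English business definition."""
    system = (
        "You are a data analyst. Explain Power BI measures to business users "
        "in one or two plain-English sentences. Do not repeat the DAX code."
    )
    user = (
        f"Table: {measure.table}\n"
        f"Measure: {measure.name}\n"
        f"DAX expression:\n{measure.dax}"
    )
    return [{"role": "system", "content": system},
            {"role": "user", "content": user}]


def describe_measures(
    measures: List[MeasureInfo],
    complete: Callable[[List[Dict[str, str]]], str],
) -> Dict[str, str]:
    """Return a mapping of 'Table[Measure]' to the generated business description."""
    descriptions: Dict[str, str] = {}
    for m in measures:
        key = f"{m.table}[{m.name}]"
        descriptions[key] = complete(build_description_prompt(m)).strip()
    return descriptions


if __name__ == "__main__":
    # Hypothetical measure and a stub in place of a real model call, so the sketch runs offline.
    sample = [MeasureInfo("Flights", "Total Departures", "SUM(Flights[Departures])")]
    print(describe_measures(sample, lambda messages: "Total number of departing flights."))
```

Keeping the model call behind a callable means the same loop works whether the descriptions come from a playground-prototyped prompt or a production endpoint.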

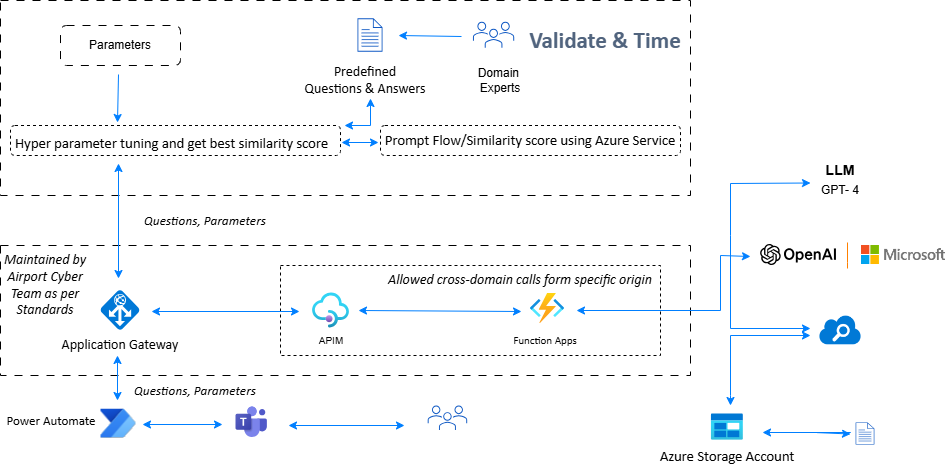

Solution Architecture

Opportunities for Improvement

Power Virtual Agent – Initiating Conversations without Trigger Phrases

The client requested the ability to ask questions directly, without first using a trigger phrase. Currently, users must start with a greeting before they can ask a question.

Power Virtual Agent – Handling Optional Feedback Buttons

Optional buttons, such as ‘Like’, ‘Dislike’ or ‘Retry’, become mandatory once they are included in the conversation flow. They should remain optional.

Indexing PDF Files in AI Search

We suggest introducing quick-mode features similar to those in the AI Playground. For example, during indexing, the playground automatically populates the required values.

Enhancing the Teams PVA

Introducing a seamless chat UI within the Teams PVA, similar to the AI Playground, would be beneficial. For example: optional feedback buttons, the ability to clear chats, and the ability to add reference links.

AI Search indexer refresh

Currently, the longest indexer schedule interval available is 24 hours. To enable a weekly refresh (reducing the cost to the client), we had to build a custom Azure Data Factory (ADF) pipeline that calls four APIs.
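The four APIs the ADF pipeline calls are not listed here. As a hedged illustration only, the sketch below shows two pieces such a pipeline might need: a check for whether a week has passed since the last run, and a request to the Azure AI Search indexer Run endpoint (`POST /indexers/{name}/run`). The service name, indexer name, key and `api-version` value are placeholders (check the current REST API version before use), and the request is built but not sent.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import quote
from urllib.request import Request

WEEKLY = timedelta(days=7)


def is_refresh_due(last_run: datetime, now: datetime, interval: timedelta = WEEKLY) -> bool:
    """True when at least `interval` has passed since the last successful run."""
    return now - last_run >= interval


def build_indexer_run_request(service: str, indexer: str, api_key: str,
                              api_version: str = "2023-11-01") -> Request:
    """Build (but do not send) a request asking Azure AI Search to run an indexer on demand."""
    url = (f"https://{service}.search.windows.net/indexers/"
           f"{quote(indexer)}/run?api-version={api_version}")
    return Request(url, data=b"", method="POST", headers={"api-key": api_key})


if __name__ == "__main__":
    # Placeholder values; the real service, indexer and key are not given in the source.
    last = datetime(2024, 5, 1, tzinfo=timezone.utc)
    now = datetime.now(timezone.utc)
    if is_refresh_due(last, now):
        req = build_indexer_run_request("my-search-service", "hr-policies-indexer", "<admin-key>")
        print(req.get_method(), req.full_url)
        # urllib.request.urlopen(req) would send it; omitted so the sketch runs offline.
```

In ADF itself, the equivalent would typically be a scheduled trigger plus Web activities; the Python version just makes the logic explicit.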

SDK consistency

The Python SDKs offer more configuration options than the C# SDKs. However, the existing function apps in the client environment were built in C#, so we continued to use them; switching to Python would have required maintaining a new App Service.

Latency Improvement

We developed a Teams virtual agent using Power Automate that interacts with OpenAI through our API endpoint. Some questions took up to 20 seconds to answer, which undermined the chat-like experience. We expect latency to drop significantly once we have access to the GPT-4o model.
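To confirm whether a model change actually reduces latency, it helps to time the same set of questions before and after the switch. This is a minimal sketch, assuming `ask` is a hypothetical callable that sends one question to the bot’s API endpoint and returns the answer; the clock is injectable so the function can be tested without network calls.

```python
import statistics
import time
from typing import Callable, Dict, Iterable


def measure_latency(ask: Callable[[str], str], questions: Iterable[str],
                    clock: Callable[[], float] = time.perf_counter) -> Dict[str, float]:
    """Time each call to `ask` and summarise the results in seconds."""
    durations = []
    for q in questions:
        start = clock()
        ask(q)
        durations.append(clock() - start)
    if not durations:
        raise ValueError("no questions supplied")
    return {
        "count": float(len(durations)),
        "median_s": statistics.median(durations),
        "max_s": max(durations),
    }


if __name__ == "__main__":
    # Stand-in for a real call to the bot's API endpoint.
    def fake_ask(question: str) -> str:
        time.sleep(0.01)
        return "answer"

    print(measure_latency(fake_ask, ["How much annual leave do I get?",
                                     "What is the parental leave policy?"]))
```

Running the same question set against each model deployment and comparing `max_s` focuses on the worst case (up to 20 seconds above), which is what breaks the chat experience.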

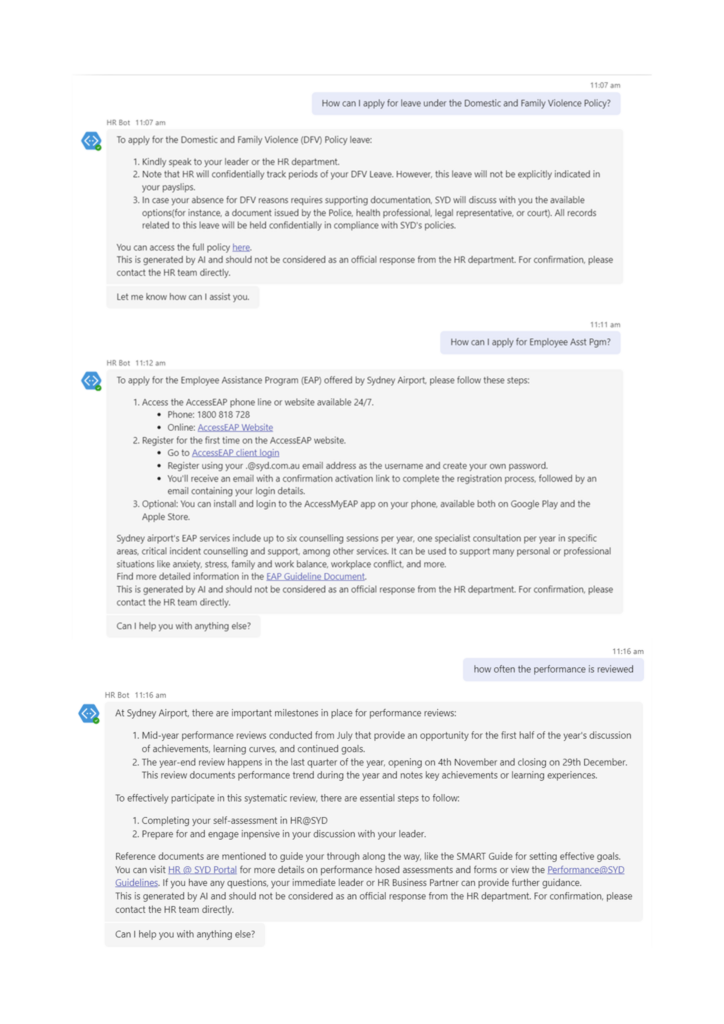

HR BOT IN ACTION

Collaborating With Microsoft

Hallucination Management

Ensuring 100% accuracy in responses was crucial. Microsoft advised us that instructions placed at the end of a prompt tend to be prioritised. By placing a “do-not-hallucinate” directive towards the end, we configured the system to acknowledge when it did not have an answer and to advise users to contact HR.
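The directive placement can be sketched as a message builder in which the “do-not-hallucinate” instruction is the last text the model sees. This is a hedged sketch: the directive wording, `NO_ANSWER_DIRECTIVE` and `build_hr_messages` are hypothetical, since the production prompt is not given here, and the retrieved policy extracts are assumed to be passed in as plain strings.

```python
from typing import Dict, List

# Hypothetical wording; the production prompt is not given in the source.
NO_ANSWER_DIRECTIVE = (
    "Answer only from the HR policy extracts provided. If they do not contain the "
    "answer, do not guess: say you don't know and advise the employee to contact HR."
)


def build_hr_messages(question: str, policy_extracts: List[str]) -> List[Dict[str, str]]:
    """Assemble chat messages so the do-not-hallucinate directive comes last."""
    context = "\n\n".join(f"[{i}] {text}" for i, text in enumerate(policy_extracts, start=1))
    system = f"You are an HR assistant for employees.\n\nHR policy extracts:\n{context}"
    # The directive follows the question, so it sits at the very end of the prompt.
    user = f"{question}\n\n{NO_ANSWER_DIRECTIVE}"
    return [{"role": "system", "content": system},
            {"role": "user", "content": user}]


if __name__ == "__main__":
    for message in build_hr_messages("Can I buy extra leave?", ["Extract A", "Extract B"]):
        print(message["role"], "->", message["content"], sep="\n", end="\n\n")
```

Appending the directive after the employee’s question, rather than only in the system message, is one way to read “towards the end”; either placement could be tested against the question set.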

Model Transition

With rapid advances in large language models (LLMs), we moved from GPT-3.5 to GPT-4 and are now awaiting access to GPT-4o (GPT-4 Omni).

Automated Question Generation and Testing

Using LlamaIndex, we automated question generation and created a master set of around 60 questions. These questions were answered manually, and the AI-generated responses were then compared against the human-written answers.
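The comparison method used is not specified. As a stand-in, the sketch below uses a simple token-overlap F1 score (a swapped-in metric, not necessarily what the team used) to flag AI answers that diverge from the manually written answers so a person can review them. `token_f1`, `flag_for_review` and the 0.5 threshold are hypothetical choices.

```python
import re
from collections import Counter
from typing import Dict, List, Tuple


def _tokens(text: str) -> List[str]:
    """Lower-case word tokens; punctuation is ignored."""
    return re.findall(r"[a-z0-9']+", text.lower())


def token_f1(candidate: str, reference: str) -> float:
    """Token-overlap F1 between an AI answer and a human reference answer (0.0 to 1.0)."""
    cand, ref = Counter(_tokens(candidate)), Counter(_tokens(reference))
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def flag_for_review(results: List[Tuple[str, str, str]],
                    threshold: float = 0.5) -> Dict[str, float]:
    """Return {question: score} for (question, ai_answer, human_answer) rows scoring below threshold."""
    flagged: Dict[str, float] = {}
    for question, ai_answer, human_answer in results:
        score = token_f1(ai_answer, human_answer)
        if score < threshold:
            flagged[question] = round(score, 2)
    return flagged
```

Token overlap is crude: a correct paraphrase can score low, so a flagged answer is a prompt for human review rather than an automatic failure. A semantic-similarity or LLM-based evaluator could replace `token_f1` without changing `flag_for_review`.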

Hyperparameter Optimisation

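We experimented with parameters such as temperature (adjusted between 0 and 1; values closer to 0 make responses more focused and deterministic, which generally helps reduce hallucination), top N documents (the number of retrieved documents passed to the model), and top P (nucleus sampling: lower values restrict the model to the most probable words, while higher values allow more varied wording) to improve response accuracy.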
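To make the temperature and top P behaviour concrete, the sketch below applies both to a toy set of token scores. It illustrates the general technique (a temperature-scaled softmax followed by nucleus, or top-p, filtering), not how any particular service implements it internally; the logits are made-up values.

```python
import math
from typing import Dict, List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw token scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("this sketch requires temperature > 0")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nucleus_filter(probs: List[float], top_p: float) -> Dict[int, float]:
    """Keep the smallest set of most likely tokens whose cumulative probability reaches top_p, renormalised."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept: List[int] = []
    cumulative = 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.5, 0.1]  # made-up scores for four candidate tokens
    for t in (0.2, 1.0):
        probs = softmax_with_temperature(logits, t)
        print(f"temperature={t}: top token p={probs[0]:.3f}")
    # A low top_p keeps only the most likely tokens; a high top_p admits more varied wording.
    print(nucleus_filter(softmax_with_temperature(logits, 1.0), 0.5))
    print(nucleus_filter(softmax_with_temperature(logits, 1.0), 0.9))
```

Lowering the temperature concentrates probability on the top token, while raising top P widens the pool of tokens the model may sample from.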

Tone Adaptation

By incorporating dynamic prompts, we tailored the tone of responses to the context. For instance, queries about leave policies required a different tone from those about grievances.
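How the context was detected is not described. This minimal sketch assumes a simple keyword match that selects a tone instruction and adds it to the system prompt; the keywords, tone wording, `TONE_RULES` and `select_tone` are all hypothetical. A production system might instead classify the question with the model itself.

```python
from typing import Dict

# Hypothetical topic keywords and tone instructions, checked in insertion order,
# so a question mentioning both a grievance and leave gets the grievance tone.
TONE_RULES: Dict[str, str] = {
    "grievance": "Use an empathetic, neutral tone and point the employee to the formal process.",
    "leave": "Use a friendly, concise tone and state entitlements clearly.",
}
DEFAULT_TONE = "Use a clear, professional tone."


def select_tone(question: str) -> str:
    """Pick a tone instruction from the first matching topic keyword."""
    q = question.lower()
    for keyword, tone in TONE_RULES.items():
        if keyword in q:
            return tone
    return DEFAULT_TONE


def build_system_prompt(question: str) -> str:
    """Combine a fixed role description with the topic-specific tone instruction."""
    return f"You are an HR assistant answering policy questions.\n{select_tone(question)}"


if __name__ == "__main__":
    print(build_system_prompt("Can I carry over annual leave?"))
```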

Best Practices for RAG (Retrieval-Augmented Generation)

We incorporated feedback from Microsoft, which suggested that instead of having OpenAI interact directly with AI Search, it is better to first retrieve the relevant information from AI Search and then have OpenAI use that information to answer the question.
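The retrieve-then-generate pattern can be sketched as two explicit steps. This is a hedged sketch with the search and model calls injected as callables: in a real deployment, `retrieve` might wrap `SearchClient.search` from the `azure-search-documents` package and `generate` might wrap `client.chat.completions.create` from the `openai` package. `answer_question`, `FALLBACK` and the early return when nothing is retrieved are hypothetical design choices.

```python
from typing import Callable, Dict, List

Retriever = Callable[[str, int], List[str]]
Generator = Callable[[List[Dict[str, str]]], str]

FALLBACK = "I couldn't find this in the HR policies. Please contact HR for help."


def answer_question(question: str, retrieve: Retriever, generate: Generator,
                    top_n: int = 3) -> str:
    """Retrieve policy passages first, then ask the model to answer using only those passages."""
    passages = retrieve(question, top_n)  # step 1: search (e.g. Azure AI Search)
    if not passages:
        # Hypothetical choice: skip the model call entirely when nothing relevant was found.
        return FALLBACK
    context = "\n\n".join(passages)
    messages = [
        {"role": "system", "content": f"Answer using only these HR policy passages:\n{context}"},
        {"role": "user", "content": question},
    ]
    return generate(messages)  # step 2: generation (e.g. an Azure OpenAI chat completion)


if __name__ == "__main__":
    # Offline stubs standing in for the search and model calls.
    stub_retrieve = lambda q, n: ["Policy passage about leave."][:n] if "leave" in q.lower() else []
    stub_generate = lambda msgs: f"(model answer using {len(msgs)} messages)"
    print(answer_question("How does leave accrue?", stub_retrieve, stub_generate))
    print(answer_question("Where do I park?", stub_retrieve, stub_generate))
```

Separating the steps gives the application control over what reaches the model: it can inspect, filter or log the retrieved passages, and decide not to call the model at all.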