This blog will show you how to load tables from snowflake to the S3 bucket and then load them into SFTP (Secure File Transfer Protocol) using matillion ETL tool. There are two methods to do this,

- Loading data from snowflake to S3 bucket and to SFTP by using the data transfer component

- Loading data from snowflake to S3 bucket and to SFTP by using python script

In both methods for loading data from Snowflake to SFTP, there are two steps:

Step 1:Unloading data from snowflake to S3 bucket(stage)

A user can load data from any source into an S3 bucket. Assume that there is a table in the snowflake. Using the S3 unload component, users can load data from the Snowflake table as a file in any format.

Step 2:Loading file from S3 bucket to SFTP

For loading files from the s3 bucket to SFTP user can use the data transfer component or python script. The files to be uploaded into the s3 bucket can be in any format, such as CSV, JSON, text, images, movies, and so on.

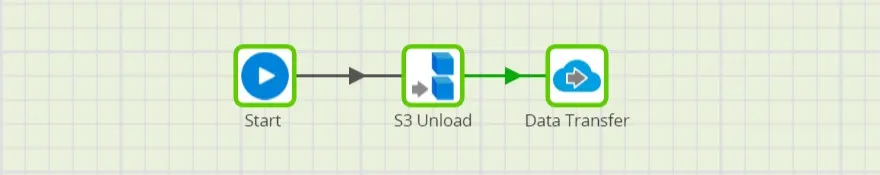

Method 1: Job for loading data from snowflake to SFTP by using data transfer component

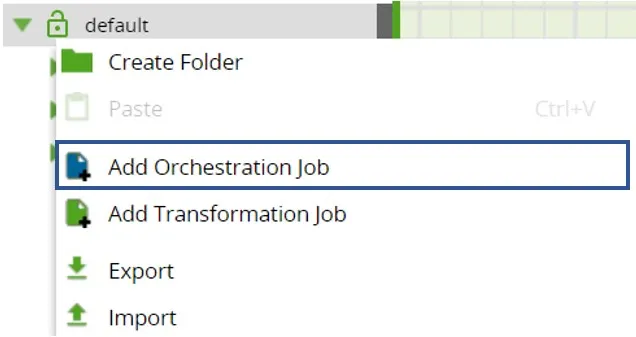

1. In matillion, add a new orchestration job by right-clicking on default and choosing ‘Add Orchestration Job’.

2. Drag and drop the ‘S3 unload’ component from components onto the canvas, attaching it to the ‘Start’ component.

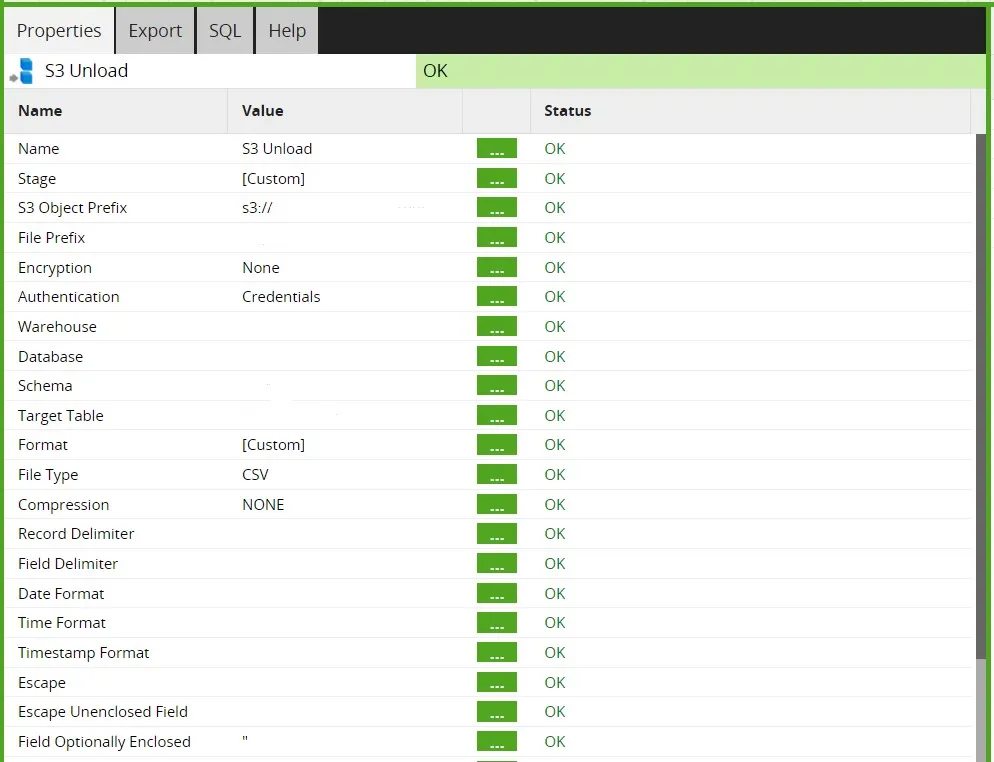

S3 Unload component: Creates files on a specified S3 bucket, and loads them with data from a table or view

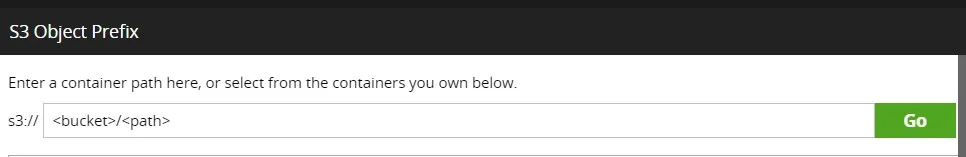

a)In the S3 object prefix specify the file path

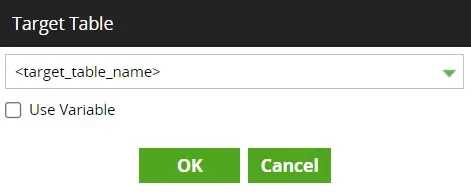

b)In the target table specify the table to unload to S3

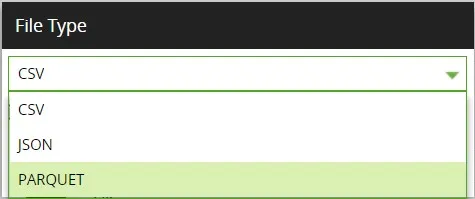

c)In File type choose the required file format. Matillion supports various file types like CSV, JSON, and PARQUET.

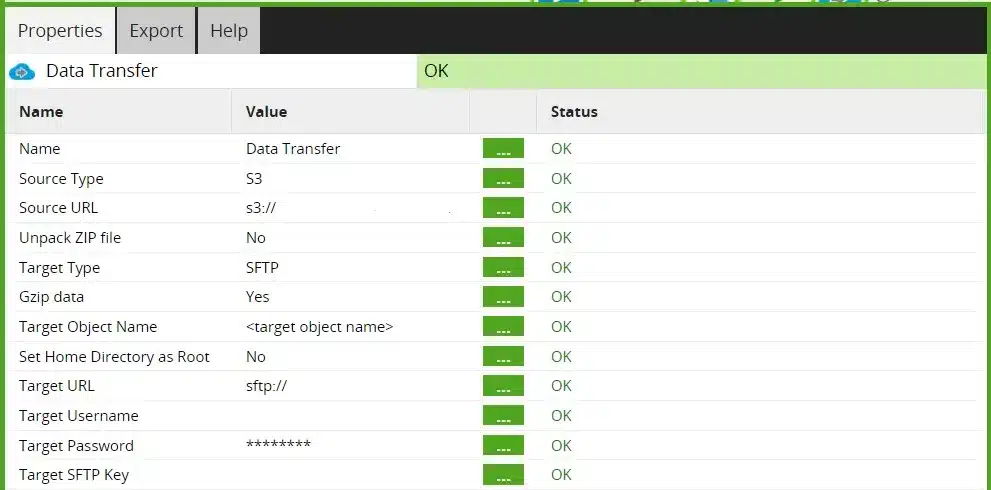

Data Transfer component: It allows users to transfer files from a specified source to a specified destination. Use the Data Transfer component to load files from S3 to SFTP and specify the source and target URLs as well as the file type. Users must provide credentials for SFTP to load files into SFTP.

a)In Source type specify the type of data source

b)In Target Type Select the target type for the new file.

Now, completed the component setup for loading data from snowflake to SFTP using the Data transfer component.

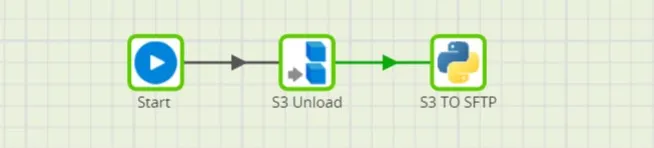

Method 2:Job for loading data from snowflake to SFTP by using python script

Instead of using Data transfer to load data from snowflake to SFTP, a python script can also be used.

In the python script by using the below python code user can transfer the file from the S3 bucket to SFTP.

import boto3

import pysftp

client = boto3.client(‘s3’)

s3 = boto3.resource(‘s3’)

s3_bucket=s3.Bucket(‘<bucket_name>’)

key='<file_path>’

res = s3.Object(‘<bucket_name>’,'<file_path>’)

cnopts = pysftp.CnOpts()

cnopts.hostkeys = None

srv = pysftp.Connection(host=”<host>”,username=”<username>”,password=<password>”,cnopts=cnopts)

with srv.open(“/<sftp_path>/<file_name>”,”wb”) as f:

client.download_fileobj(‘<bucket_name>’,key,f)

For example:

If your file path in the s3 bucket is like, Bucket_name_s3/folder_name/sub_folder_name/file.csv

Then, Your bucket name should be: Bucket_name_s3

Your file path should be like: folder_name/sub_folder_name/file.csv

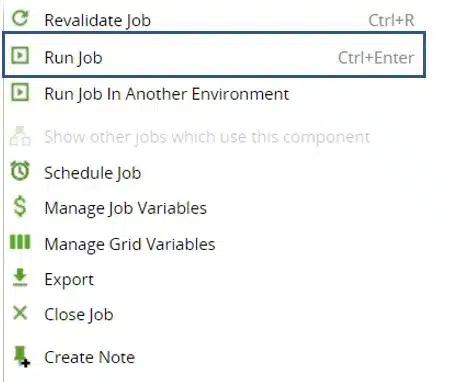

Finally, for both the methods run the job by right-clicking on the canvas and clicking ‘run job’ as shown above. Now the data will be successfully loaded from snowflake to SFTP.

The Jobs created in this blog enable users to load data from snowflake to SFTP using two different components i.e Data transfer and python script component. Similar to this process we can also unload data from snowflake to Azure container and GCP cloud storage container.