This post demonstrates how to encrypt a file in AWS S3 using C# in an AWS Lambda function. To do this, we need a PGP key pair: the public key encrypts the file and the private key decrypts it.

Here are five simple steps that will get us there.

1. Create an AWS Lambda function in Visual Studio

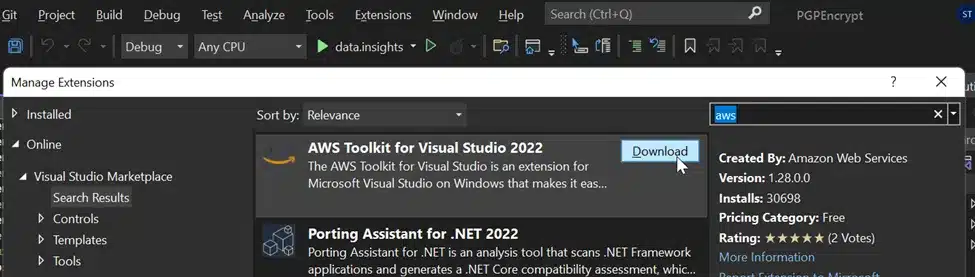

The AWS Toolkit is not available in Visual Studio by default. Install it by navigating to EXTENSIONS -> MANAGE EXTENSIONS.

Download Toolkit in Visual Studio

If you have any problem downloading the AWS Toolkit, try installing the latest version of Visual Studio.

After installation, close Visual Studio and reopen it.

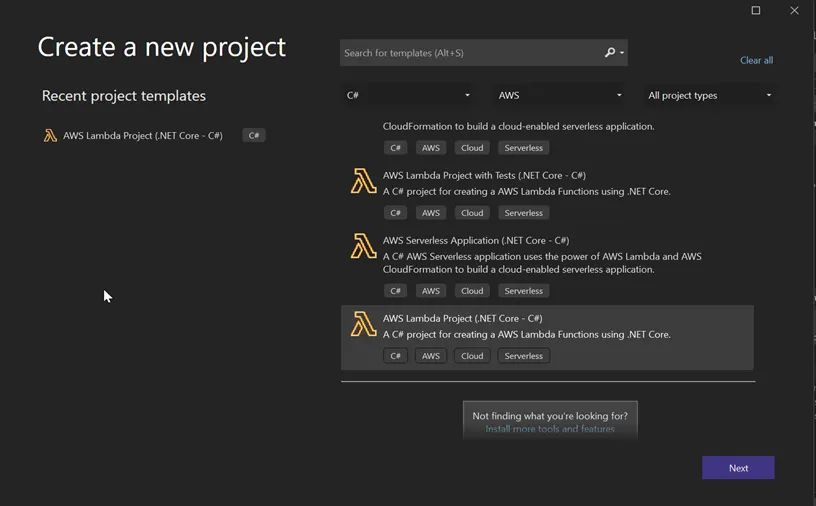

- Select AWS Lambda function (.NET Core).

- Enter a Project Name and Location to save your project before clicking ‘Next’.

Creating new AWS Project

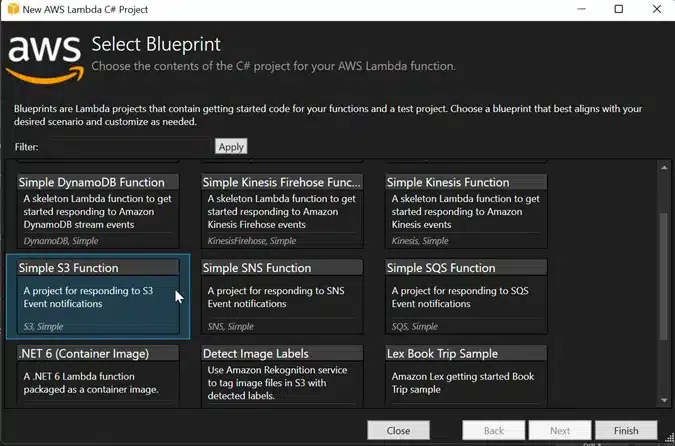

- Choose the Simple S3 Function blueprint, then click ‘Finish’ to create the new project.

Simple S3 Function

2. Connect Visual Studio to your AWS account

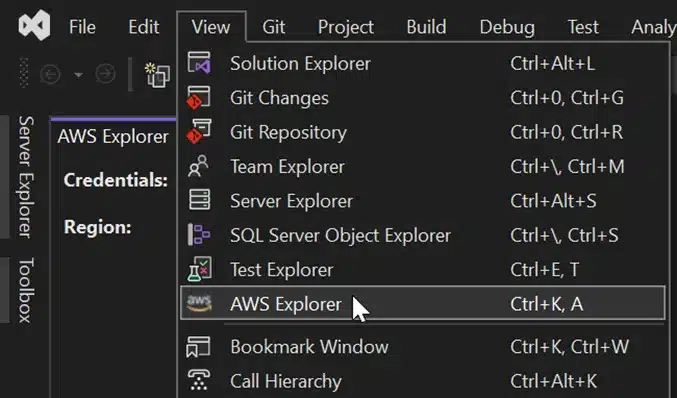

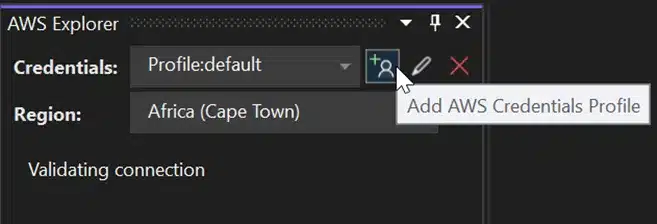

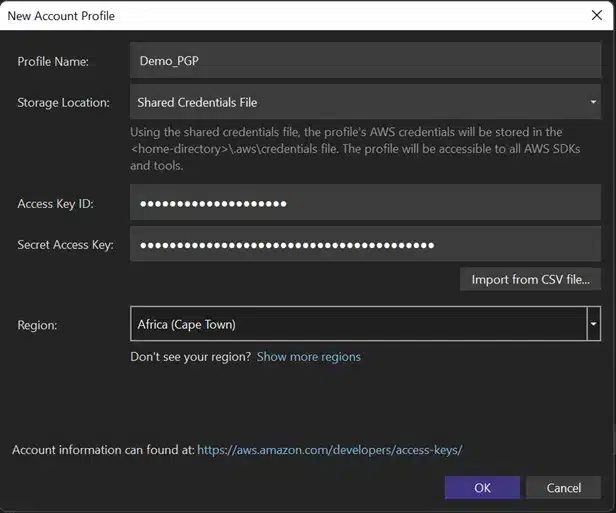

- In the View menu, select AWS Explorer.

Choose AWS Explorer

- Create an AWS Credentials Profile so that we can use the AWS services that are available.

Create a User Profile

Give the profile a name and enter the access key and secret key from your AWS account. Select the appropriate region and click ‘OK’. For more details, visit

https://docs.aws.amazon.com/powershell/latest/userguide/pstools-appendix-sign-up.html

Create New Profile Account

The file is encrypted using the public key and decrypted with the private key.

The following links provide a sample key pair that I generated. You can also generate your own demo keys online.

Public key : https://textdoc.co/ksVyajNlzWQOGi5S

Private key : https://textdoc.co/BLJovT06ZlFmcdxG

3. Code for encrypting the file

The packages referenced by the code's using directives are available through the NuGet Package Manager. Install them before building the code.

To install them, go to PROJECT -> MANAGE NUGET PACKAGES.

NuGet Package Manager

After installing the necessary packages, create a file named Function.cs and paste the following code. It takes the file from S3, encrypts it with the public key (read from the Base64PublicKey environment variable), and stores the encrypted file in the temp location.

    using System;
    using System.IO;
    using System.Threading.Tasks;
    using Amazon.Lambda.Core;
    using Amazon.Lambda.S3Events;
    using Amazon.S3;
    using Amazon.S3.Model;
    using PgpCore;

    // Assembly attribute to enable the Lambda function's JSON input to be converted into a .NET class.
    [assembly: LambdaSerializer(typeof(Amazon.Lambda.Serialization.SystemTextJson.DefaultLambdaJsonSerializer))]

    namespace pgp_encrypt_s3;

    public class Function
    {
        IAmazonS3 S3Client { get; set; }

        /// <summary>
        /// Default constructor. This constructor is used by Lambda to construct the instance. When invoked in a Lambda environment
        /// the AWS credentials will come from the IAM role associated with the function and the AWS region will be set to the
        /// region the Lambda function is executed in.
        /// </summary>
        public Function()
        {
            S3Client = new AmazonS3Client();
        }

        /// <summary>
        /// Constructs an instance with a preconfigured S3 client. This can be used for testing outside of the Lambda environment.
        /// </summary>
        /// <param name="s3Client"></param>
        public Function(IAmazonS3 s3Client)
        {
            this.S3Client = s3Client;
        }

        /// <summary>
        /// This method is called for every Lambda invocation. This method takes in an S3 event object and can be used
        /// to respond to S3 notifications.
        /// </summary>
        /// <param name="evnt"></param>
        /// <param name="context"></param>
        /// <returns></returns>
        public async Task<string?> FunctionHandler(S3Event evnt, ILambdaContext context)
        {
            var s3Event = evnt.Records?[0].S3;
            if (s3Event == null)
            {
                return null;
            }

            try
            {
                string BucketName = s3Event.Bucket.Name;
                string InputFileName = s3Event.Object.Key;
                string OutputFileName = InputFileName + ".pgp";

                // The encrypted file is written back to the same bucket, so skip
                // .pgp objects to avoid encrypting our own output again.
                if (InputFileName.EndsWith(".pgp", StringComparison.OrdinalIgnoreCase))
                {
                    return null;
                }

                // The public key is stored Base64-encoded in an environment variable.
                var Base64PublicKey = Environment.GetEnvironmentVariable("Base64PublicKey");
                if (string.IsNullOrEmpty(Base64PublicKey))
                {
                    throw new InvalidOperationException("The Base64PublicKey environment variable is not set.");
                }
                byte[] publicKeyBytes = Convert.FromBase64String(Base64PublicKey);

                // Local temp file that receives the encrypted output.
                string tempFilePath = Path.Combine(Path.GetTempPath(), Path.GetFileName(OutputFileName));

                GetObjectRequest getObjRequest = new GetObjectRequest
                {
                    BucketName = BucketName,
                    Key = InputFileName
                };

                PGP pgp = new PGP();

                // Alternative: read the key from a file instead of the environment variable.
                //Stream publicKeyStream = new FileStream(Path.Combine(Path.GetTempPath(), "PUBLIC.asc"), FileMode.Open);
                using (MemoryStream publicKeyStream = new MemoryStream(publicKeyBytes))
                using (GetObjectResponse getObjResponse = await this.S3Client.GetObjectAsync(getObjRequest))
                using (Stream getObjStream = getObjResponse.ResponseStream)
                using (Stream outputFileStream = File.Create(tempFilePath))
                {
                    // Stream the S3 object through PGP encryption into the temp file.
                    pgp.EncryptStream(getObjStream, outputFileStream, publicKeyStream, true, true);
                }

                // Upload the encrypted temp file back to the bucket.
                AmazonUploader myUploader = new AmazonUploader(this.S3Client);
                myUploader.sendMyFileToS3(tempFilePath, BucketName, "", OutputFileName);

                return "Success";
            }
            catch (Exception e)
            {
                context.Logger.LogInformation(e.Message);
                context.Logger.LogInformation(e.StackTrace);
                throw;
            }
        }
    }

Code for encrypting the file
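The function expects the public key in the Base64PublicKey environment variable as a Base64 string. As a rough sketch of how that value could be produced and decoded again, assuming the key is ASCII-armored text (the key below is a hypothetical placeholder, not a real key, and the helper names are made up for illustration):

```csharp
using System;
using System.Text;

// Hypothetical placeholder; a real armored public key is much longer.
string armoredKey = "-----BEGIN PGP PUBLIC KEY BLOCK-----\nPLACEHOLDER\n-----END PGP PUBLIC KEY BLOCK-----\n";

// Encode the key text so it fits safely into a single-line environment variable.
static string ToBase64(string text) =>
    Convert.ToBase64String(Encoding.UTF8.GetBytes(text));

// Decode it again, which mirrors what the Lambda function does at runtime.
static string FromBase64(string base64) =>
    Encoding.UTF8.GetString(Convert.FromBase64String(base64));

string encoded = ToBase64(armoredKey);   // value to store in Base64PublicKey
string decoded = FromBase64(encoded);

Console.WriteLine(decoded == armoredKey); // True
```

The encoded string can then be set as the value of Base64PublicKey in the Lambda function's environment variables.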

Create a file named AmazonUploader.cs. This code uploads the encrypted file from the temp location back to the S3 bucket.

    using Amazon.S3;
    using Amazon.S3.Transfer;

    namespace pgp_encrypt_s3
    {
        class AmazonUploader
        {
            // In Lambda, the default client uses the function's IAM role and region.
            IAmazonS3 S3Client { get; set; }

            public AmazonUploader()
            {
                S3Client = new AmazonS3Client();
            }

            public AmazonUploader(IAmazonS3 s3Client)
            {
                this.S3Client = s3Client;
            }

            // Input explained:
            // localFilePath = the full local file path, e.g. "c:\mydir\mysubdir\myfilename.zip"
            // bucketName = the name of the bucket in S3; the bucket should already be created
            // subDirectoryInBucket = if this string is not empty, the file is uploaded
            //                        under a subdirectory (key prefix) with this name
            // fileNameInS3 = the file name in S3
            public bool sendMyFileToS3(string localFilePath, string bucketName, string subDirectoryInBucket, string fileNameInS3)
            {
                // Create a TransferUtility instance, passing it the S3 client.
                TransferUtility utility = new TransferUtility(this.S3Client);

                // Build the upload request.
                TransferUtilityUploadRequest request = new TransferUtilityUploadRequest();
                request.BucketName = bucketName;

                if (string.IsNullOrEmpty(subDirectoryInBucket))
                {
                    // No subdirectory, just the file name.
                    request.Key = fileNameInS3;
                }
                else
                {
                    // Subdirectory and file name together form the object key.
                    request.Key = subDirectoryInBucket + "/" + fileNameInS3;
                }

                request.FilePath = localFilePath; // local file name

                utility.Upload(request); // start the transfer

                return true; // indicate that the file was sent
            }
        }
    }

Load File to AWS S3

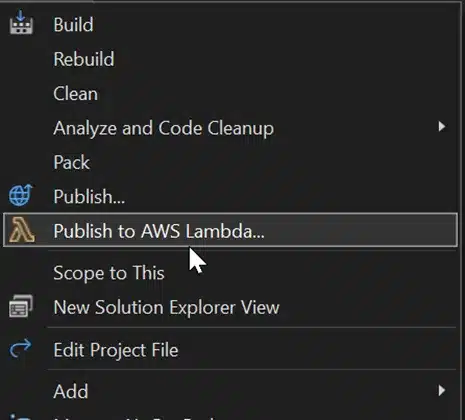

4. Upload the code to the Lambda function

- Right-click the project in Solution Explorer and choose the option to publish to AWS Lambda.

Publish to AWS Lambda

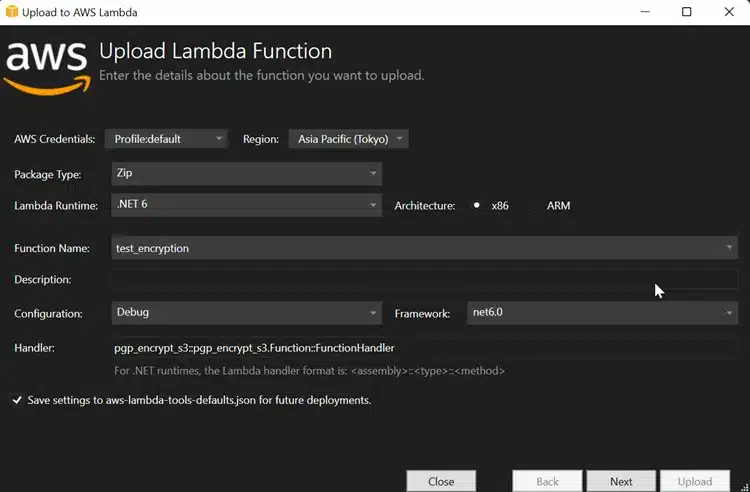

- Fill in the required details to configure the deployment.

- Function Name is the name of the Lambda function in AWS to which the code will be deployed.

Upload details of the Lambda function

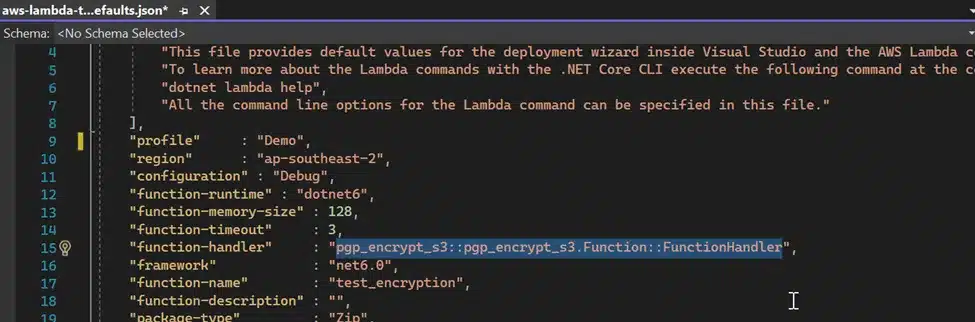

- The handler name can be found in “aws-lambda-tools-defaults.json”. This file is created for you when you choose the ‘Simple-S3-Function’ blueprint.

Function-Handler
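For a .NET Lambda function, the handler string has three parts separated by `::`: the assembly name, the fully qualified type name, and the method name. A small sketch of how the value for this project is put together, assuming the assembly is named pgp_encrypt_s3 like the namespace (the `BuildHandler` helper is only for illustration and is not part of any AWS library):

```csharp
using System;

// Joins the three parts of a .NET Lambda handler string.
static string BuildHandler(string assemblyName, string typeName, string methodName) =>
    $"{assemblyName}::{typeName}::{methodName}";

// Assumed names: assembly and namespace pgp_encrypt_s3, class Function, method FunctionHandler.
string handler = BuildHandler("pgp_encrypt_s3", "pgp_encrypt_s3.Function", "FunctionHandler");

Console.WriteLine(handler); // pgp_encrypt_s3::pgp_encrypt_s3.Function::FunctionHandler
```

If the deployed function reports that it cannot find the handler, comparing these three parts against the project is a good first check.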

- After the code is deployed, we can test it either from Visual Studio itself or from the Lambda function in the AWS console.

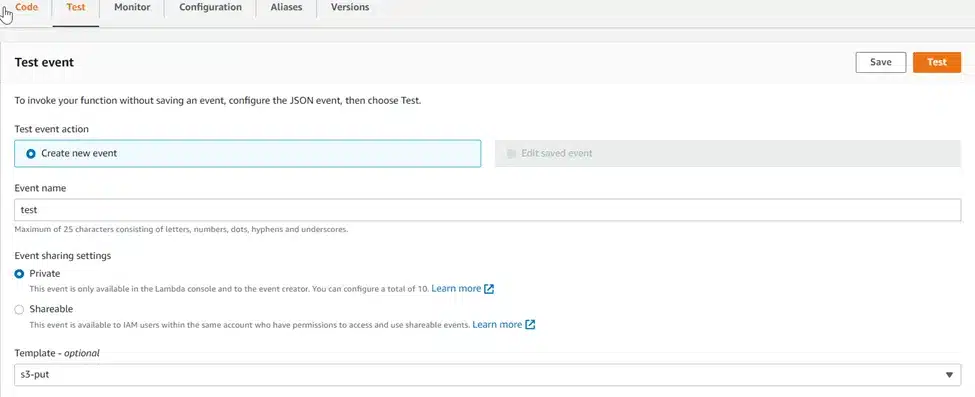

S3-put Event

- Before we run the Lambda function, we need to create a test event from the s3-put template. The template comes with default values, which we should replace with our own bucket name and file name. Below is a sample S3-Put event in JSON format.

    {
      "Records": [
        {
          "eventVersion": "2.0",
          "eventSource": "aws:s3",
          "awsRegion": "us-east-1",
          "eventTime": "1970-01-01T00:00:00.000Z",
          "eventName": "ObjectCreated:Put",
          "userIdentity": {
            "principalId": "EXAMPLE"
          },
          "requestParameters": {
            "sourceIPAddress": "127.0.0.1"
          },
          "responseElements": {
            "x-amz-request-id": "EXAMPLE123456789",
            "x-amz-id-2": "EXAMPLE123/5678abcdefghijklambdaisawesome/mnopqrstuvwxyzABCDEFGH"
          },
          "s3": {
            "s3SchemaVersion": "1.0",
            "configurationId": "testConfigRule",
            "bucket": {
              "name": "PGP_Encrypt_Bucket",
              "ownerIdentity": {
                "principalId": "EXAMPLE"
              },
              "arn": "arn:aws:s3:::PGP_Encrypt_Bucket"
            },
            "object": {
              "key": "test_file.txt",
              "size": 1024,
              "eTag": "0123456789abcdef0123456789abcdef",
              "sequencer": "0A1B2C3D4E5F678901"
            }
          }
        }
      ]
    }
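Of all the fields in this event, the function only reads the bucket name and the object key. The following sketch pulls those two values out of the JSON with System.Text.Json, just to show where they live. The `ReadBucketAndKey` helper is hypothetical, and the URL-decoding step assumes that S3 delivers object keys URL-encoded in real events (for example, a space arriving as `+`).

```csharp
using System;
using System.Net;
using System.Text.Json;

// Trimmed-down version of the sample event, keeping only the fields we read.
string eventJson = @"{ ""Records"": [ { ""s3"": {
    ""bucket"": { ""name"": ""PGP_Encrypt_Bucket"" },
    ""object"": { ""key"": ""test_file.txt"" } } } ] }";

// Returns the bucket name and the URL-decoded object key of the first record.
static (string Bucket, string Key) ReadBucketAndKey(string json)
{
    using JsonDocument doc = JsonDocument.Parse(json);
    JsonElement s3 = doc.RootElement.GetProperty("Records")[0].GetProperty("s3");
    string bucket = s3.GetProperty("bucket").GetProperty("name").GetString() ?? "";
    string rawKey = s3.GetProperty("object").GetProperty("key").GetString() ?? "";
    return (bucket, WebUtility.UrlDecode(rawKey) ?? "");
}

var (bucket, key) = ReadBucketAndKey(eventJson);
Console.WriteLine($"{bucket} / {key}"); // PGP_Encrypt_Bucket / test_file.txt
```

When editing the test event, these are the two values to replace with a real bucket and an existing file.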

Since AWS Lambda does not offer an inline code editor for C# by default, any change has to be made in Visual Studio and the code re-uploaded before testing. Finally, click Test to run the code.

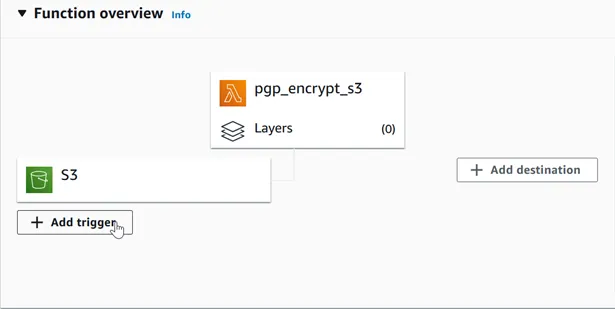

5. Add a trigger to the Lambda function

- Click +Add trigger to add a trigger to the Lambda Function.

Adding event trigger to Lambda

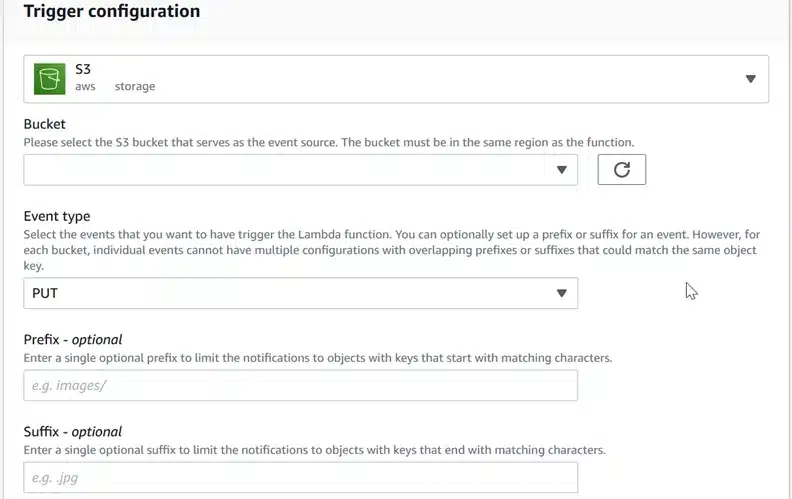

- After clicking it, select the bucket and configure the event type.

- Optional: Prefix and Suffix restrict the trigger to selected files. If we give the suffix ‘.csv’, the function is triggered only when a CSV file arrives in the bucket.

Trigger configuration
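To make the effect of Prefix and Suffix concrete, here is a small sketch of the matching rule, assuming exact, case-sensitive comparison against the start and end of the object key. `MatchesFilter` is a hypothetical helper for illustration only; the real filtering is done by S3 before the function is invoked.

```csharp
using System;

// True when the key starts with the prefix and ends with the suffix.
// An empty prefix or suffix matches every key.
static bool MatchesFilter(string key, string prefix, string suffix) =>
    key.StartsWith(prefix, StringComparison.Ordinal) &&
    key.EndsWith(suffix, StringComparison.Ordinal);

Console.WriteLine(MatchesFilter("data.csv", "", ".csv"));              // True
Console.WriteLine(MatchesFilter("incoming/data.csv", "incoming/", "")); // True
Console.WriteLine(MatchesFilter("data.csv.pgp", "", ".csv"));          // False
Console.WriteLine(MatchesFilter("data.CSV", "", ".csv"));              // False
```

Because the encrypted file is saved to the same bucket with a .pgp extension, a suffix such as ‘.csv’ also keeps the function's own output from triggering it again.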

This creates an AWS event trigger. When a file is uploaded to the configured bucket, the function takes the file name, encrypts the file with our public key, and saves the encrypted file as filename.pgp.

Similarly, to decrypt the file, use “DecryptStream”:

    // Assumes pgp, encryptedFileName and srcFileName are defined, as in the encryption code above.
    string passPhrase = "Test_PGP";

    using (Stream privateKeyStream = new FileStream(Path.Combine(Path.GetTempPath(), "private.asc"), FileMode.Open))
    using (Stream encryptedStream = new FileStream(Path.Combine(Path.GetTempPath(), encryptedFileName + ".pgp"), FileMode.Open))
    using (Stream outputFileStream = File.Create(Path.Combine(Path.GetTempPath(), srcFileName + " decrypted.txt")))
    {
        // Decrypt the .pgp file with the private key and its passphrase.
        pgp.DecryptStream(encryptedStream, outputFileStream, privateKeyStream, passPhrase);
    }

Decrypt the file

I hope this article helps you encrypt and decrypt files using PGP keys.